Starter

Designed for regular creators who need more credits and flexibility to explore OmniHuman 1.5 features.

- First-time users

- short clips

- personal projects.

- HD video generation

- lip-sync & body animation

- download enabled

- email support

Film-Grade Digital Humans From One Photo

Turn a single image and voice into a lifelike digital actor with real lip-sync, emotional performance, and cinematic motion. Create talking, singing, and storytelling videos that feel truly alive.

No prompts needed. Upload a photo and voice.

Click to upload image

Login required

Use JPG for best results — clearer inputs produce better outputs.

Login required

Describe the desired video effect or animation style

Click to preview the power of AI character animation

Credit Calculation: 1 credit per second of audio (rounded up)

No audio = 0 credits

Turn a single photo and voice into film-grade digital performances in minutes.

Upload a clear portrait to start your AI digital human.

Works with real people, anime characters, and pets.

Upload a voice clip or song to drive emotion and lip-sync.

OmniHuman 1.5 understands tone, rhythm, and expression automatically.

Create a film-grade AI avatar video with realistic lip-sync, emotion, and motion.

Preview, fine-tune with simple text prompts, and download your final performance.

See how a single photo and voice become film-grade AI performances with real lip-sync, emotional acting, and cinematic motion.

From a single photo and voice, OmniHuman 1.5 delivers film-grade AI performances with real emotion, rhythm, and intent — not just animation.

Upload multiple reference images and generate several outputs in one go, boosting your creative workflow. Responds seamlessly to text prompts, giving you fine control over object generation, camera movements, and specific actions.

Generate dynamic group dialogues and ensemble performances by routing separate audio tracks to the correct characters in a single frame.

Transforms static images into expressive, high-quality videos by syncing lip movements, emotions, and gestures with audio.

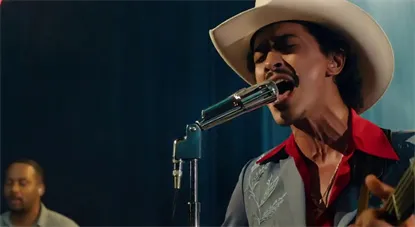

Creates soulful digital singers with expressive motion, natural pauses, and adaptability across genres — from intimate ballads to energetic concerts, even handling high-pitched performances with ease.

Interprete audio's context to drive natural gestures and authentic emotional shifts. It creates digital actors that align words with intent—delivering expressive, cinematic performances with full dramatic range.

From vocals to emotions to intent. OmniHuman 1.5 performs like a real actor.

Create emotional digital singers from one photo and a song.

Beyond lip-sync with natural pauses, breathing, and rhythm for styles from soft ballads to high-energy concerts.

Bring a single photo to life with audio-driven emotions.

Delivers cinematic acting with a full range of expressions, from calm sincerity to intense drama — no text prompt needed.

Understands meaning, not just sound.

Actions and expressions align with spoken intent for realistic, intentional character behavior.

Audio + text for precise direction.

Guide camera motion, actions, and style while maintaining perfect lip-sync and performance coherence.

From solo acting to duet and group scenes.

Routes each voice to the right character and enables natural interaction in shared frames.

Works across humans, anime, stylized characters, and even pets.

Consistent expression and motion across different visual styles.

Create film-grade AI digital humans for storytelling, music, content creation, and virtual communication — all from a single photo and voice.

Turn photos into emotional AI singers with rhythm, breath, and stage presence.

Generate dramatic digital actors from still portraits for shorts, scenes, trailers, and character arcs.

Create natural talking avatars for announcements, product explainers, or branded personalities.

Stage duo and group conversations with accurate voice routing and interaction.

Animate VTuber models or anime portraits with real emotional depth and lip-sync.

Bring stylized characters and pets to life with voice-driven emotion and motion.

Explain topics, simulate role-play, or deliver personalized lessons with digital instructors.

Discover OmniHuman 1.5 AI pricing with flexible plans: Starter, Creator, and Pro Studio. Unlock cinematic AI video creation with immersive sound. Start your OmniHuman AI Video plan today!

Designed for regular creators who need more credits and flexibility to explore OmniHuman 1.5 features.

Professional-grade plan with higher credit limits, ideal for advanced users and content creators.

🎁 +5 bonus credits · Save 8.33%All-inclusive plan with maximum credits, built for studios and businesses with heavy video needs.

🎁 +50 bonus credits · Save 31.25%OmniHuman 1.5 is a film-grade digital human model in the OmniHuman series that turns one photo and audio into realistic lip-sync, emotional acting, and cinematic video.

Upload a single photo, add voice or music, and generate. OmniHuman 1.5 will produce a lifelike performance with real lip-sync, emotion, and cinematic motion. Optional text prompts can refine actions and camera direction.

Yes. You can use generated videos for commercial work, including marketing, content creation, and client projects. You are responsible for ensuring image and audio rights for uploaded materials.

OmniHuman uses a credit-based system. You only pay for the videos you generate. Credits never expire and can be purchased as needed. No subscription required.

Talking avatars, singing performances, cinematic acting, character storytelling, VTuber content, multi-character scenes, and anime or pet animations — all from one photo and voice.

For assistance, email us at support@omnihuman-15.com. Failed generations are automatically refunded.

Create lifelike digital humans with real lip-sync, emotion, and cinematic motion — no animation skills needed.

Create Your Digital Human