Improve OmniHuman 1.5 Talking Avatars: Better Lip Sync, Less Face Drift, More Realism

If you’re searching for how to improve OmniHuman 1.5 talking avatars, you’re probably seeing one of these issues:

The lip sync feels slightly late or “floaty”

The face changes over time (face drift / identity drift)

Expressions look stiff or uncanny (the classic uncanny valley problem)

Gestures are too big and make the avatar feel “AI”

This guide is a practical, repeatable workflow for photo + audio → talking head video production. It focuses on the details that improve realism and conversion: accurate lip sync, stable identity, micro-expressions, and controlled motion.

✅ Start on OmniHuman-15: Generate a talking avatar now →

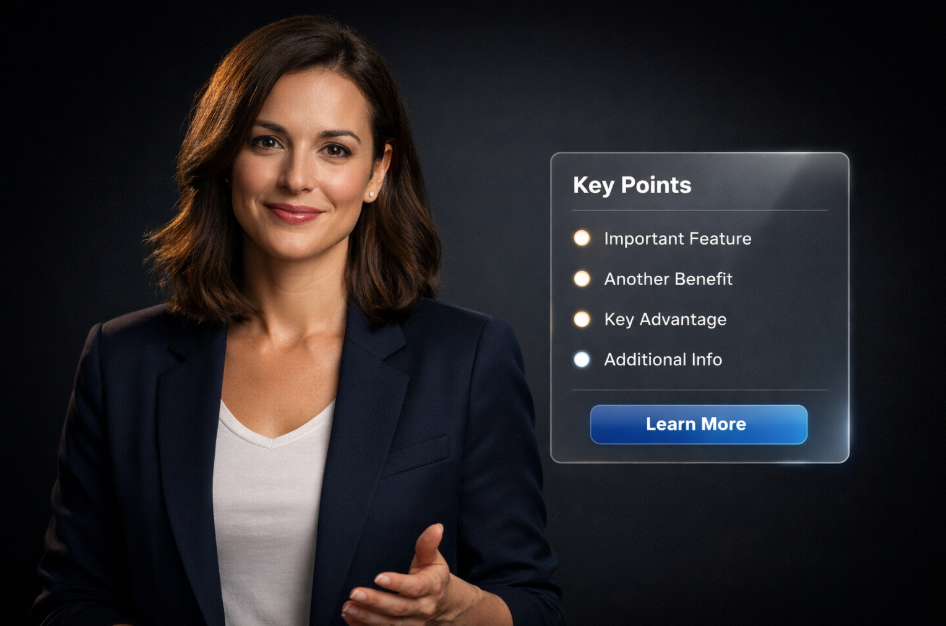

Example Showcase

Example 1 — UGC Product Demo Spokesperson (Talking Head)

Example 2 — Education Explainer (AI Teacher Avatar)

Example 3 — News Anchor / AI Presenter (Broadcast Style)

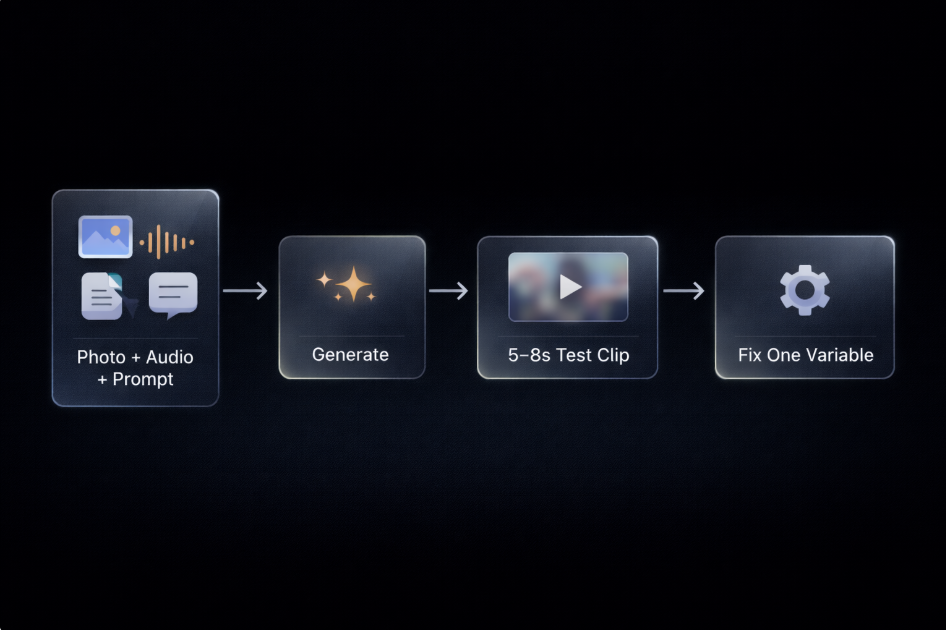

Workflow Diagram (Photo + Audio → Better Results)

Why lip sync “looks wrong” even when the mouth is moving

Many people assume lip sync is a single setting. In reality, viewers judge it by several signals at once:

1) Lip closure timing (p/b/m)

If the lips don’t fully close on p/b/m, your talking avatar instantly feels synthetic. This is the fastest “AI tell.”

2) Consonant clarity (t/k/s)

Noisy or clipped audio blurs consonants, and the mouth motion becomes vague. If you’re Googling how to make lip sync more accurate, start with audio cleanup.

3) Teeth stability

Teeth “popping” in and out across frames usually comes from unstable identity constraints, over-expressive prompts, or a poor source portrait.

4) Eye behavior (blinks + focus shifts)

People forgive small lip sync imperfections, but they rarely forgive dead-stare eyes. Natural blinks and subtle focus shifts help reduce the uncanny-valley effect.

Key idea: Better lip sync is often not a mouth problem—it’s an input quality and motion control problem.

The proven workflow: better lip sync, less face drift, more realism

✅ Try the workflow on OmniHuman-15: Open the generator →

This workflow targets long-tail searches like:

how to improve lip sync in OmniHuman 1.5

how to fix face drift / identity drift

how to reduce uncanny valley in AI talking avatars

best prompts for realistic talking head videos

Step 1 — Choose the best photo (identity stability starts here)

For photo-to-talking-avatar results, your input portrait acts as the model’s identity anchor. Use this checklist:

Use

Front-facing or slight 10–20° angle

Even soft lighting (avoid hard shadows across lips)

Clear mouth region (no hands, no hair covering)

Natural expression (neutral or slight smile)

Simple background

Avoid

Sunglasses, masks, heavy occlusion

Extreme side profile

Low-resolution face crops

Strong shadow line across lips

Why it reduces face drift:

The cleaner the facial geometry and mouth region, the less the model needs to guess. That reduces identity instability and texture warping over time.

Step 2 — Clean your audio (the #1 lip sync multiplier)

If lip sync feels late/floaty, do this before regenerating:

Trim long silence at the start/end

Reduce background noise (hiss, room tone)

Normalize loudness (avoid clipping)

Keep a natural speaking pace

Avoid music that competes with the voice
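These cleanup steps are normally done in an audio editor. If you script your pipeline, here is a minimal Python sketch of three of them (trimming edge silence, flagging clipping, and normalizing level), assuming mono audio already decoded into floats between -1.0 and 1.0. The function names, the 0.01 silence threshold, and the -1 dBFS target are illustrative assumptions, not OmniHuman settings. It uses simple peak normalization as a stand-in; true loudness normalization (LUFS, as in EBU R128) and noise reduction need a dedicated audio tool.

```python
def trim_silence(samples, threshold=0.01, pad=0):
    """Drop leading/trailing samples quieter than `threshold`, keeping `pad` samples of margin."""
    start = 0
    while start < len(samples) and abs(samples[start]) < threshold:
        start += 1
    if start == len(samples):
        return []  # the whole clip is below the threshold
    end = len(samples)
    while abs(samples[end - 1]) < threshold:
        end -= 1
    start = max(0, start - pad)
    end = min(len(samples), end + pad)
    return list(samples[start:end])


def count_clipped(samples, limit=0.999):
    """Count samples at or near full scale; normalizing afterwards cannot repair these."""
    return sum(1 for s in samples if abs(s) >= limit)


def peak_normalize(samples, target_dbfs=-1.0):
    """Scale so the loudest sample sits at `target_dbfs` (peak level, not perceived loudness)."""
    peak = max((abs(s) for s in samples), default=0.0)
    if peak == 0.0:
        return list(samples)
    gain = 10 ** (target_dbfs / 20) / peak
    return [s * gain for s in samples]


# Usage on a tiny hand-made buffer.
raw = [0.0, 0.0, 0.2, -0.5, 0.25, 0.0]
voice = trim_silence(raw)  # [0.2, -0.5, 0.25]
if count_clipped(voice):
    print("Clipped audio: re-record or re-export at a lower level")
voice = peak_normalize(voice)  # loudest sample is now about 0.891 (-1 dBFS)
```

Clipping is checked before normalizing because scaling a clipped waveform down only makes the flattened peaks quieter; it does not restore them.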

✅ Generate with photo + audio here: Start now →

Step 3 — Use “director prompts” (short prompts outperform long prompts)

Prompts should not be poetry. They should be constraints.

Director prompt formula

Role + framing + emotion level + gesture limit + camera + lighting + “accurate lip sync”

Safe baseline prompt (copy/paste)

“Realistic AI talking avatar, medium shot, steady camera, clean background. Accurate lip sync, subtle micro-expressions, minimal gestures, soft studio lighting.”

When gestures are too big (add this line)

“Minimal gestures, controlled expression, no exaggerated motion.”
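If you generate many variants, it can help to assemble the prompt from the formula’s slots so that only one slot changes between runs. A minimal Python sketch, assuming the generator simply takes the prompt as free text: it builds the string you paste in and does not call any OmniHuman API. The class and field names are hypothetical; the default values reuse phrases from the safe baseline prompt.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DirectorPrompt:
    """One field per slot of the director prompt formula (hypothetical structure)."""
    role: str = "Realistic AI talking avatar"
    framing: str = "medium shot"
    emotion: str = "subtle micro-expressions"
    gestures: str = "minimal gestures"
    camera: str = "steady camera"
    lighting: str = "soft studio lighting"
    extra: tuple = ()  # optional constraint phrases appended at the end

    def render(self) -> str:
        # Order: role + framing + emotion level + gesture limit + camera + lighting + lip sync.
        parts = [self.role, self.framing, self.emotion, self.gestures,
                 self.camera, self.lighting, "accurate lip sync", *self.extra]
        return ", ".join(p for p in parts if p) + "."

    def word_count(self) -> int:
        # Handy for keeping prompts short.
        return len(self.render().split())


# Usage: the baseline, plus a calmer variant for when gestures are too big.
baseline = DirectorPrompt()
calmer = DirectorPrompt(extra=("controlled expression", "no exaggerated motion"))
print(baseline.render())
print(calmer.render())
```

Because the dataclass is frozen, each variant is a new object, which makes it easy to log exactly which slot changed between two generations.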

✅ Paste your prompt and generate: Open OmniHuman-15 →

Step 4 — Always run a 5–8 second test clip first

This is the fastest way to stop wasting time: a short test catches problems before you spend time on a full render.

Check these five things:

p/b/m lip closure

teeth stability

face drift (does identity shift?)

eye behavior (natural blinks)

gesture intensity
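If your voice track is longer than the test window, one way to make the 5–8 second test is to cut the first few seconds of the audio and generate with that. A minimal sketch using Python’s standard `wave` module, assuming uncompressed WAV input; `make_test_clip`, `TEST_CHECKS`, and `failed_checks` are hypothetical names. The five checks are kept as a simple scorecard you fill in after watching the clip.

```python
import wave

# The five things to check on every test clip.
TEST_CHECKS = ("p/b/m lip closure", "teeth stability", "face drift",
               "eye behavior", "gesture intensity")


def make_test_clip(src_path, dst_path, seconds=6.0):
    """Copy the first `seconds` of a WAV file to `dst_path`; return the clip length in seconds."""
    with wave.open(src_path, "rb") as src:
        params = src.getparams()
        n_frames = min(int(seconds * params.framerate), params.nframes)
        frames = src.readframes(n_frames)
    with wave.open(dst_path, "wb") as dst:
        dst.setparams(params)    # same channels, sample width and rate as the source
        dst.writeframes(frames)  # the wave module updates the header's frame count to match
    return n_frames / params.framerate


def failed_checks(results):
    """`results` maps each check to True (looks right) or False; missing checks count as failed."""
    return [c for c in TEST_CHECKS if not results.get(c, False)]
```

A hard cut at exactly six seconds can land mid-word; nudging `seconds` so the clip ends on a pause gives a fairer read of lip closure at the end.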

Step 5 — Fix by changing ONE variable at a time (pro debugging)

This method targets long-tail searches like “how to fix face drift” and “how to stop uncanny avatar motion.”

Lip sync off → change audio only

Face drift → change photo only

Overacting → change prompt only

Framing wrong → change camera words only (“close-up” / “medium shot”)

Background too busy → simplify background / “clean background”
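To enforce the one-variable rule across runs, you can keep a small record of each run’s inputs and compare consecutive runs. A minimal Python sketch; the record fields and symptom labels are hypothetical and taken from the list above. Camera and background are tracked as separate fields here even though, in practice, they are words inside the prompt.

```python
# Symptom -> the one input the list above says to change.
FIX_TARGET = {
    "lip sync off": "audio",
    "face drift": "photo",
    "overacting": "prompt",
    "framing wrong": "camera",
    "background too busy": "background",
}


def changed_inputs(previous, current):
    """Names of inputs whose values differ between two runs, sorted."""
    keys = set(previous) | set(current)
    return sorted(k for k in keys if previous.get(k) != current.get(k))


def check_single_change(previous, current, symptom):
    """Raise if the new run changes anything other than the input mapped to `symptom`."""
    expected = FIX_TARGET[symptom]
    changed = changed_inputs(previous, current)
    if changed != [expected]:
        raise ValueError(f"for {symptom!r} change only {expected!r}, but changed {changed}")
    return expected


# Usage: two consecutive runs that differ only in the audio file.
run_1 = {"photo": "portrait_v1.jpg", "audio": "take1.wav", "prompt": "baseline",
         "camera": "medium shot", "background": "clean background"}
run_2 = dict(run_1, audio="take1_cleaned.wav")
check_single_change(run_1, run_2, "lip sync off")  # returns "audio"
```

If a run changes two inputs and the result improves, you cannot tell which change helped; the check raises in exactly that case.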

Micro-expressions: the realism lever most people ignore

If you want a talking avatar that “feels human,” you need micro-expressions that match what is being said:

slight eyebrow raise on a key point

soft smile near the CTA

calm confidence during explanation

small pause before a conclusion

Important: micro-expressions should be subtle. If you push emotion too hard, the model tends to compensate with bigger motion, and the result falls into the uncanny valley.

Sentence-level emotion mapping (easy + consistent)

Map emotion to sentences, not individual words:

Sentence 1: calm confidence

Sentence 2: slight emphasis

Sentence 3: relief/clarity

CTA: friendly certainty
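Because the mapping works per sentence, it is easy to produce as a direction sheet for recording the voice or choosing the prompt’s emotion level. A minimal Python sketch, assuming a plain-text script whose last sentence is the CTA; the helper names are hypothetical, and the plan is something you read from, not a parameter documented for OmniHuman 1.5.

```python
import re

# Labels from the sentence-level mapping above.
BODY_EMOTIONS = ["calm confidence", "slight emphasis", "relief/clarity"]
CTA_EMOTION = "friendly certainty"


def split_sentences(script):
    """Naive split after ., ! or ? followed by whitespace (abbreviations like "e.g." will mis-split)."""
    return [s for s in re.split(r"(?<=[.!?])\s+", script.strip()) if s]


def emotion_plan(script):
    """Pair each sentence with one emotion label; the final sentence is treated as the CTA."""
    sentences = split_sentences(script)
    plan = []
    for i, sentence in enumerate(sentences):
        if i == len(sentences) - 1:
            label = CTA_EMOTION
        else:
            # Sentences past the third reuse the last body label.
            label = BODY_EMOTIONS[min(i, len(BODY_EMOTIONS) - 1)]
        plan.append((label, sentence))
    return plan


for label, sentence in emotion_plan(
    "Editing used to take me hours. This cut it to minutes. "
    "Now my videos ship on time. Try it today!"
):
    print(f"{label:>18} | {sentence}")
```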

Use cases that drive clicks and conversions (and what to optimize)

1) UGC Ads / Product Demo Spokesperson Video

Traffic intent: UGC talking avatar, product demo spokesperson video

Best length: 20–30 seconds

Script structure

0–2s Hook: pain/outcome

3–10s Proof: what you did

11–22s Benefits: 2–3 bullets

23–30s CTA: one action
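A quick way to check that a draft script fits these windows is to estimate speaking time from word count. A minimal Python sketch, assuming a speaking rate of about 2.5 words per second (roughly 150 words per minute; measure your own voice) and taking each window’s length as end minus start from the structure above. `check_segment_timing` is a hypothetical helper name.

```python
# ASSUMPTION: average speaking rate; replace with a measured value for your voice.
WORDS_PER_SECOND = 2.5

# (segment, start second, end second) from the UGC script structure above.
UGC_STRUCTURE = [
    ("Hook", 0, 2),
    ("Proof", 3, 10),
    ("Benefits", 11, 22),
    ("CTA", 23, 30),
]


def check_segment_timing(segments, structure=UGC_STRUCTURE, wps=WORDS_PER_SECOND):
    """Return (name, estimated seconds, window seconds, fits) for each script segment."""
    if len(segments) != len(structure):
        raise ValueError(f"expected {len(structure)} segments, got {len(segments)}")
    report = []
    for (name, start, end), text in zip(structure, segments):
        estimated = len(text.split()) / wps
        window = end - start
        report.append((name, round(estimated, 1), window, estimated <= window))
    return report


draft = [
    "Tired of blurry product videos?",
    "I recorded one voiceover and turned a single photo into a spokesperson clip.",
    "No camera, no studio, and edits take minutes instead of a reshoot day.",
    "Tap the link and make your first clip today.",
]
for row in check_segment_timing(draft):
    print(row)
```

A segment that does not fit is a cue to cut words rather than to speed up delivery, since faster speech works against the lip sync advice in Step 2.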

✅ Generate a UGC-style talking avatar: Try OmniHuman-15 →

2) Education Explainer (AI Teacher Avatar)

Traffic intent: education talking head generator, AI teacher avatar

Best length: 20–40 seconds

Structure

“In 20 seconds…”

3 points

one takeaway line

✅ Create an explainer avatar here: Open OmniHuman-15 →

3) News Anchor / AI Presenter

Traffic intent: news anchor AI presenter, AI presenter video

Best length: 10–25 seconds

Structure

3 updates

1 highlight

✅ Generate a news-style presenter: Start now →

Fast troubleshooting (save this checklist)

Lip sync feels late / floaty

Reduce noise, normalize volume

Avoid clipping

Slow the speech slightly

Short test clip first

Keep prompts short

Face drift / identity drift

Use a clearer portrait (front-facing, even light)

Avoid occlusion on mouth region

Simplify background

Reduce prompt intensity

Use shorter clips, then scale

Uncanny valley / “too AI”

Add “subtle micro-expressions, natural blinks”

Remove emotional extremes (“dramatic”, “energetic”)

Reduce gestures and camera movement words

FAQ

How do I improve lip sync in OmniHuman 1.5?

Clean the audio first (noise + clipping), then use a short director prompt and validate with a 5–8 second test clip before rendering longer videos.

How do I fix face drift / identity drift in talking avatars?

Replace the input portrait with a well-lit, front-facing photo, reduce occlusion near the mouth, simplify backgrounds, and avoid long, overly expressive prompts.

What is the best prompt for a realistic talking head video?

Use a short constraint-based prompt: medium shot, steady camera, accurate lip sync, subtle micro-expressions, and minimal gestures.

How do I reduce uncanny valley in AI talking avatars?

Focus on eye behavior (natural blinks), subtle micro-expressions, steady framing, clean audio, and limited gestures.

Ready to generate?

If you want the fastest path to a “human-feeling” result:

Upload a clean portrait

Upload clean audio

Paste the baseline director prompt

Run a 5–8 second test

Fix one variable

Export the full clip

✅ Start on OmniHuman-15: Generate your first clip now →
