What is OmniHuman 1.5? Exploring the Next Generation of AI Avatars

OmniHuman 1.5 is a framework from ByteDance that generates vivid character animation from a single image and one audio track, with motion synchronized to the speech’s rhythm, prosody, and semantic content.

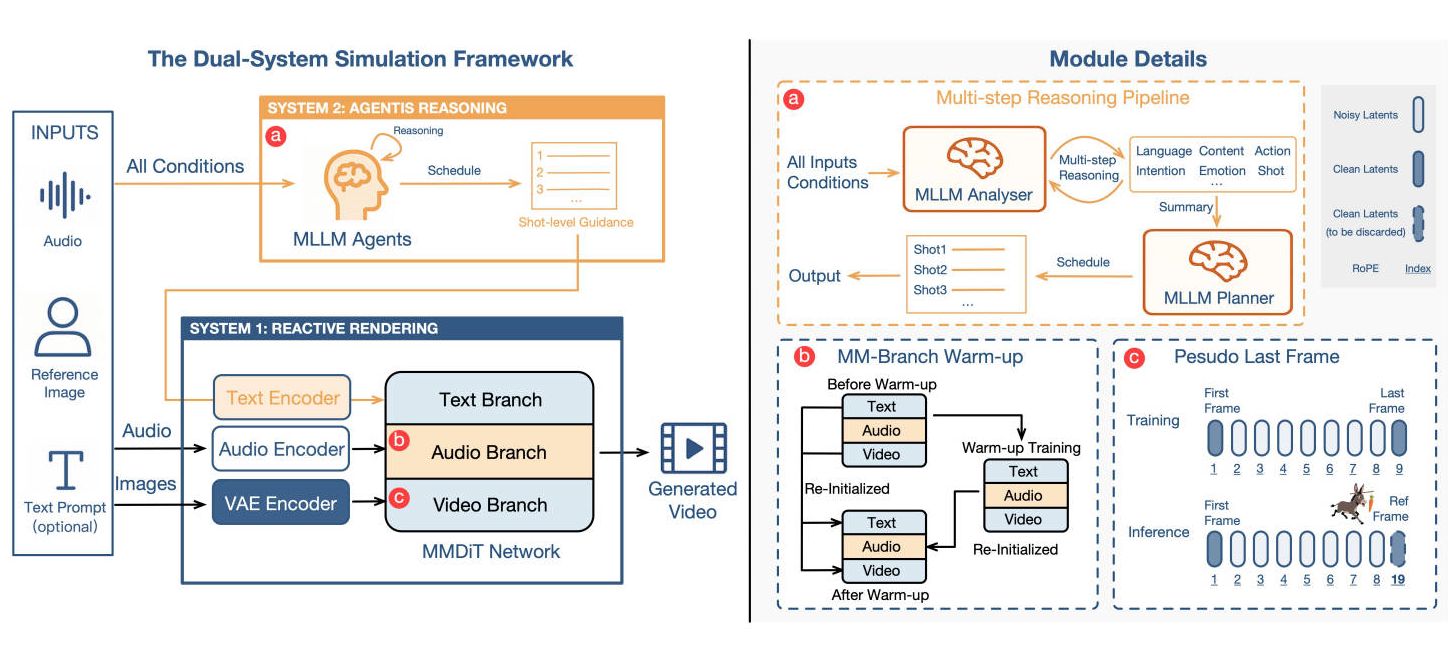

Unlike traditional virtual human models, which often focus on reactive tasks like lip-sync or rhythm-based gestures, OmniHuman 1.5 simulates both intuitive reactions and thoughtful planning, creating a virtual human with an early form of “cognition” or “mind”.

The Core Innovation: Dual-System Theory in Virtual Humans

The foundation of OmniHuman 1.5 is inspired by Daniel Kahneman’s Dual-System Theory, which describes two dominant modes of human cognition:

System 1: Fast, intuitive, and unconscious reactions, like turning your head instantly when hearing a sound.

System 2: Slow, deliberate, and logical thinking, such as strategizing while playing chess.

Existing virtual human models can be seen as System 1-only. They handle reactive tasks efficiently but lack context-aware reasoning, which results in repetitive or monotonous behavior. OmniHuman 1.5 introduces a dual-system framework that combines reactive responses with high-level planning for richer, context-aware animations.

System 1: MM-DiT for Reactive Rendering

The MM-DiT (Multimodal Diffusion Transformer) executes the high-level plans from System 2, acting as the fast, reactive System 1. It integrates System 2’s textual action schedule with the raw audio and image inputs to generate high-quality, expressive video output.

Key innovations in MM-DiT include:

Multimodal DiT Architecture: Independent symmetrical branches for audio and video to handle multi-modal fusion efficiently.

Pseudo-Final-Frame Design: During inference, a reference image is placed at the “last frame” to guide identity consistency without constraining the motion dynamics.

This design ensures characters maintain identity fidelity while allowing freedom of motion, resolving the typical trade-off between character consistency and animation flexibility.
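The pseudo-final-frame idea can be illustrated with a toy sketch. This is not the model's implementation: frames are stand-in strings, the generator is a placeholder, and the names `build_conditioning` and `strip_pseudo_final` are hypothetical. It also assumes the pseudo-final slot is dropped from the output after generation, which the description above does not state.

```python
from typing import List, Optional

# Stand-in for an image or latent; None marks a slot the model must generate.
Frame = Optional[str]


def build_conditioning(reference: str, num_frames: int) -> List[Frame]:
    """Return num_frames empty slots followed by one pseudo-final slot
    that holds the reference image (hypothetical helper)."""
    if num_frames < 1:
        raise ValueError("num_frames must be at least 1")
    return [None] * num_frames + [reference]


def strip_pseudo_final(generated: List[str]) -> List[str]:
    """Drop the trailing pseudo-final frame (an assumption of this sketch)."""
    return generated[:-1]


slots = build_conditioning("ref.png", num_frames=4)

# Toy "generator": fills every empty slot with a placeholder frame name
# and leaves the reference slot untouched.
video = [f"frame_{i}" if s is None else s for i, s in enumerate(slots)]
output = strip_pseudo_final(video)
```

Because the reference sits only at the end of the sequence, none of the generated slots is pinned to the reference pose, which is one way to read "guiding identity without constraining motion".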

System 2: MLLM Agent as the “Thinking Brain”

To emulate System 2, OmniHuman 1.5 introduces a Multimodal Large Language Model (MLLM) Agent, which serves as the character’s planning brain. It performs two key functions:

Analyze: The MLLM analyzer receives all input data (images and audio) and uses chain-of-thought reasoning to extract essential information about the character’s identity, emotions, intentions, dialogue, and environment.

Plan: The MLLM planner takes the analysis output and generates a structured “action schedule” in textual form, detailing what actions the character should perform at specific time frames (e.g., “At second 5, the character should display a surprised expression and raise their hand”).

This action schedule acts as the character’s semantic “soul”, guiding behavior beyond mere reactive motion.
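As a rough illustration of what a textual action schedule might look like in code, the sketch below models each entry as a timestamp plus a free-text instruction and serializes the list in time order. The `ScheduledAction` type, the `render_schedule` helper, and the second example action are hypothetical; the actual schedule format used by OmniHuman 1.5 is not specified here.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class ScheduledAction:
    start_s: float    # when the action begins, in seconds
    description: str  # free-text instruction for the renderer


def render_schedule(actions: List[ScheduledAction]) -> str:
    """Serialize actions to text, one per line, ordered by start time."""
    ordered = sorted(actions, key=lambda a: a.start_s)
    return "\n".join(
        f"At second {a.start_s:g}, {a.description}" for a in ordered
    )


schedule = [
    ScheduledAction(
        5.0,
        "the character should display a surprised expression and raise their hand",
    ),
    # Hypothetical extra entry, added only to show ordering.
    ScheduledAction(0.0, "the character should look toward the camera"),
]
text = render_schedule(schedule)
print(text)
```

Keeping the plan as plain text with explicit timestamps makes it easy for a language model to produce and for a downstream renderer to consume as conditioning.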

Applications of OmniHuman 1.5

Context-Aware Audio-Driven Animation

Generate characters that move naturally with speech, expressing genuine emotions and matching gestures to intent. Ideal for music and emotive performances.

Multi-Person Scene Performance

Create dynamic group interactions and ensemble scenes by routing multiple audio tracks to the correct characters.
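Routing audio to characters can be pictured as a simple mapping step. The sketch below is an assumption about how such routing could be represented, not a description of the product: `route_tracks`, the track file names, and the character labels are all hypothetical.

```python
from typing import Dict, List


def route_tracks(
    track_speakers: Dict[str, str], characters: List[str]
) -> Dict[str, List[str]]:
    """Group audio track names by the character each one drives."""
    routed: Dict[str, List[str]] = {name: [] for name in characters}
    for track, speaker in track_speakers.items():
        if speaker not in routed:
            raise KeyError(
                f"track {track!r} names unknown character {speaker!r}"
            )
        routed[speaker].append(track)
    return routed


routing = route_tracks(
    {"alice.wav": "left", "bob.wav": "right"},
    characters=["left", "right"],
)
```

Rejecting tracks that name an unknown character surfaces a mismatched scene setup early instead of silently leaving a character without audio.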

Robust and Diverse Input Support

Produce high-quality, synchronized videos across themes, from realistic animals to anthropomorphic and stylized cartoon characters.

How to Use OmniHuman 1.5

1. Upload Images and Audio:

Provide reference images (JPG, PNG, WEBP, GIF) and audio (MP3, OGG, WAV, M4A).

2. Generate Action Schedule:

The MLLM Agent analyzes the inputs and produces a structured, semantic action plan for the character.

3. Render Video:

MM-DiT synthesizes the final video, combining reactive motion with the planned schedule for seamless, expressive animation.

4. Preview & Share:

View the output video and download it to share.
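Before uploading in step 1, files can be checked locally against the supported formats. The sketch below classifies a file by extension only, using exactly the formats listed in step 1; `classify_upload` is a hypothetical helper, not part of any OmniHuman API, and the service may accept or reject files on other grounds such as size or duration.

```python
from pathlib import PurePath

# Extensions taken from the formats listed in step 1.
IMAGE_EXTS = {".jpg", ".png", ".webp", ".gif"}
AUDIO_EXTS = {".mp3", ".ogg", ".wav", ".m4a"}


def classify_upload(filename: str) -> str:
    """Return "image" or "audio" based on the file extension.

    Raises ValueError for extensions outside the listed formats.
    """
    ext = PurePath(filename).suffix.lower()
    if ext in IMAGE_EXTS:
        return "image"
    if ext in AUDIO_EXTS:
        return "audio"
    raise ValueError(f"unsupported file type: {filename!r}")


kinds = [classify_upload(name) for name in ("Portrait.PNG", "voice.m4a")]
```

Extension checks are only a first filter; a file with a matching extension can still contain data in a different format.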

OmniHuman 1.5 enables virtual humans to act as performers, not just players, opening the door for next-generation AI-driven digital humans with enhanced intelligence, empathy, and contextual awareness.

Experience OmniHuman 1.5 and explore how it transforms virtual human creation, from simple imitation to truly lifelike, context-aware performance. Start creating your intelligent virtual characters today!

Reference: OmniHuman-1.5: Instilling an Active Mind in Avatars via Cognitive Simulation
